Scaling kdb+ Architecture

Scaling kdb+ Tick Architecture

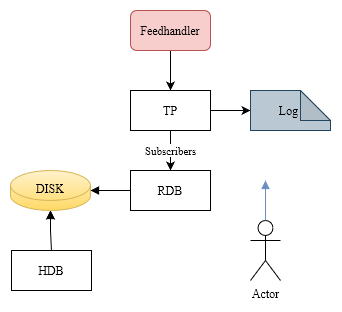

Storing - One Database of Market Data

- Problem: Storing market data

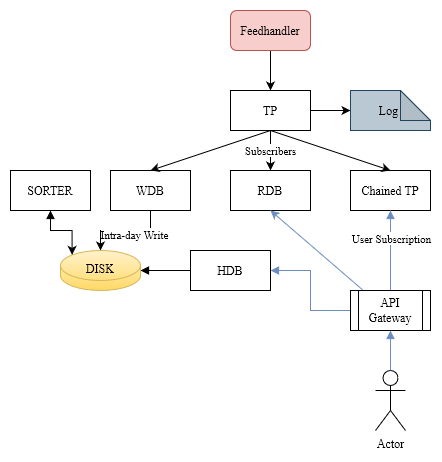

- FH – FeedHandler - Processes data

- TP – TickerPlant - Logs to disk, Forwards to listeners

- RDB - Realtime-DataBase - Keeps recent in RAM, Answers user queries

- HDB - Historical DataBase - Provides access to historical data from persistent storage

Scaling Out Tick

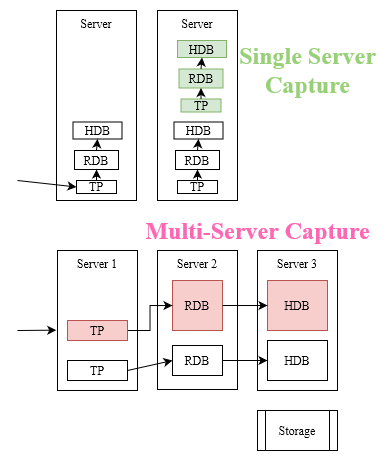

How are we going to scale and deploy Tick?

Problem: Reasonably allowing multiple databases

Very early on you will need to make some long-term decisions. I’m not going to say what is best, as I don’t believe that’s possible. What I would like to suggest is a framework for deciding: as a team, rather than arguing A against B, try to agree on which parameters would cause a tipping point.

- Pivot Points for:

  - Storage - DDN / RAID / SSD / S3

  - Server-Split - Efficiency, reliability

  - Launcher - Kubernetes, Bash, SSH

  - Cloud vs On-Prem

Considering Storage

- Do NOT pose the question as SAN or DDN. Ask what is the tipping point?

- What is available within the firm?

- How much effort is it to onboard an external solution, and for what benefit?

- Tipping point – if you need very fast storage, use local SSD; otherwise use RAID, as it provides reliability and easier failover.

Tick+ - Strengthening the Core Capture

Problem: You scaled out but now you can’t manually jump in and fix issues yourself. You need to automate improvements to the core stack.

These are issues that everyone encounters and there are well-documented solutions.

- Slow consumers put the TP at risk -> Chained TP. Cut off slow subscribers

- End-of-day savedown blocks queries -> WDB

  - Persist data throughout the day to a temp location

  - Sort is slow -> perform it offline

- Long user queries block HDB -> -T timeouts

- Bad user queries -> GW + -g + auto-restart

- High TP/RDB CPU - batching
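To make the batching item concrete, here is a minimal sketch written in Python rather than q, purely for illustration. The class `BatchingPublisher` and its `send` hook are hypothetical names, not part of kdb+tick. The idea is only that the TP buffers incoming rows and forwards one message per table on a timer, instead of one message per update.

```python
from collections import defaultdict


class BatchingPublisher:
    """Buffers rows per table and forwards them as one batch per flush."""

    def __init__(self, send):
        # send(table, rows) is a hypothetical hook standing in for the
        # publish-to-subscribers step.
        self.send = send
        self.pending = defaultdict(list)

    def upd(self, table, row):
        # Called once per incoming update: only append, do not publish.
        self.pending[table].append(row)

    def flush(self):
        # Called from a timer: one downstream message per table, then clear.
        for table, rows in self.pending.items():
            if rows:
                self.send(table, rows)
        self.pending.clear()


sent = []
tp = BatchingPublisher(lambda table, rows: sent.append((table, list(rows))))
for i in range(5):
    tp.upd("trade", ("AAPL", 100.0 + i, 10))
tp.upd("quote", ("AAPL", 99.9, 100.1))
tp.flush()  # six updates arrive downstream as two messages
```

The trade-off is latency: subscribers see data at most one timer interval late, in exchange for far fewer messages for the TP and RDB to handle.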

Growing Pains

Problem: We scaled out even further and now processes are everywhere

- We need security everywhere

- Some databases are too large for one machine

- Users querying host:port RDB/HDB direct

  - Won’t work if server dies

  - Requires expertise

- Users can’t find data

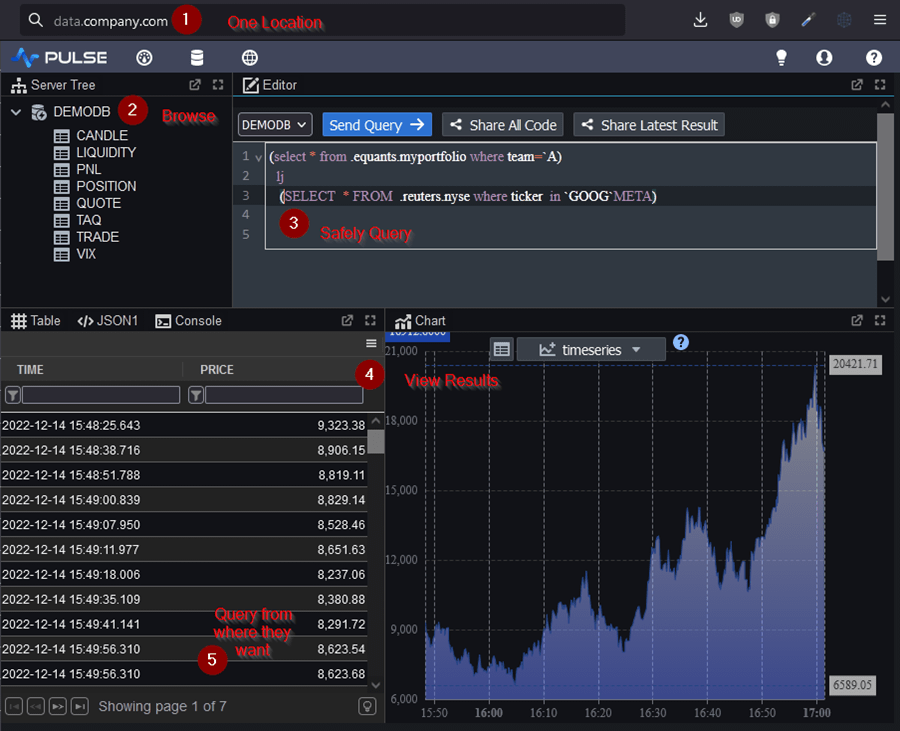

The UI Users Want

Essential Components

For many reasons we need to know where all components are running and we need that info to be accessible. Let's introduce two components to achieve that:

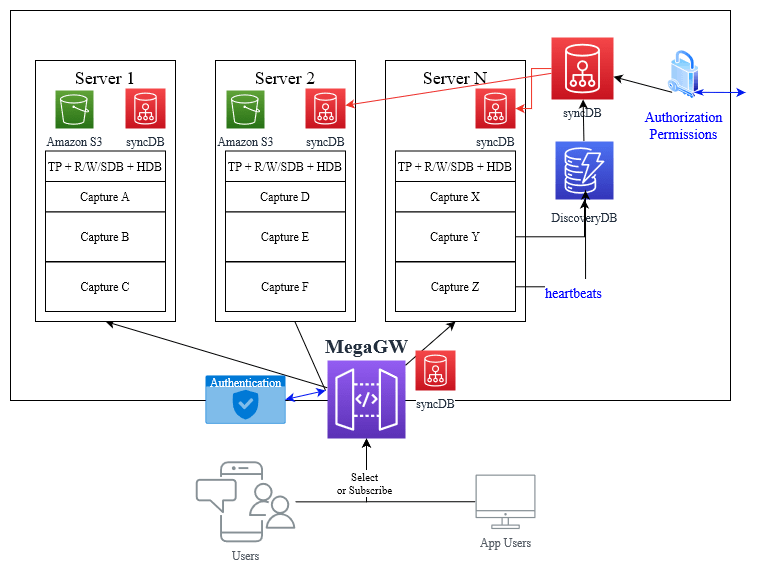

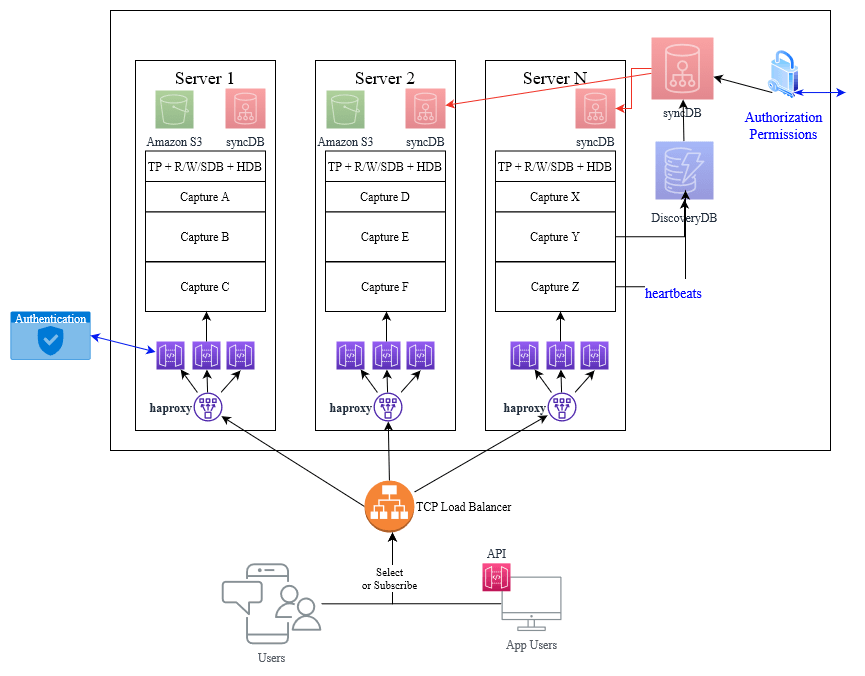

DiscoveryDB

My preference is to use kdb for this, as it is well suited and the team already has the expertise. Every process, once started, sends a regular heartbeat to one or more discovery databases. On a separate timer, the DiscoveryDB publishes the list of existing services to syncDB.
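As a rough illustration of the heartbeat idea, here is a small Python sketch (my preference in practice is kdb, as stated above; Python is used here only to keep the example short). The class name, the `ttl_seconds` expiry and the `(name, host, port)` key are all assumptions for the example, not a description of a real implementation.

```python
import time


class DiscoveryDB:
    """Records the last heartbeat per service and lists the ones still alive."""

    def __init__(self, ttl_seconds=30.0, clock=time.monotonic):
        self.ttl = ttl_seconds      # assumed: a service is "existing" if seen within ttl
        self.clock = clock          # injectable so the example is deterministic
        self.last_seen = {}         # (name, host, port) -> time of last heartbeat

    def heartbeat(self, name, host, port):
        # Called by every process on its own regular timer.
        self.last_seen[(name, host, port)] = self.clock()

    def snapshot(self):
        # Called on a separate timer; the result is what would be sent to syncDB.
        now = self.clock()
        return sorted(k for k, seen in self.last_seen.items() if now - seen <= self.ttl)


now = [0.0]
db = DiscoveryDB(ttl_seconds=30.0, clock=lambda: now[0])
db.heartbeat("rdb_equity", "server1", 5010)
db.heartbeat("hdb_equity", "server2", 5011)
now[0] = 20.0
db.heartbeat("rdb_equity", "server1", 5010)   # the RDB keeps beating, the HDB goes quiet
now[0] = 40.0
live = db.snapshot()
```

Here only `rdb_equity` is still listed at time 40, because the HDB has not been heard from for longer than the assumed 30-second window.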

syncDB

syncDB has a master process that sends updates to all servers and downstream processes. When any capture on a server wants to query a sync setting, it calls the API, which looks at the most local instance only. It’s designed NOT to be fast but to be hyper-reliable. We are not spinning instances up and down every minute; these are mostly databases that sit on large data stores, so caching them for long periods is fine. Worst case, we query a non-existent server and time out. What we don’t do is delete or flush the cache.
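The "never flush" rule is easy to get wrong, so here is a minimal Python sketch of what a local instance might look like. `LocalSyncCache`, `apply_update` and `get` are hypothetical names chosen for this example. The only point being shown is that updates from the master are merged in, and nothing is ever removed.

```python
class LocalSyncCache:
    """Local copy of sync settings: updates are merged, nothing is ever flushed."""

    def __init__(self):
        self.settings = {}

    def apply_update(self, update):
        # Merge an update pushed from the master. Keys missing from the
        # update are kept, so an empty or partial push cannot wipe the cache.
        self.settings.update(update)

    def get(self, key, default=None):
        # Reads only the local copy; no network call is made here.
        return self.settings.get(key, default)


cache = LocalSyncCache()
cache.apply_update({"rdb_equity": ("server1", 5010), "hdb_equity": ("server2", 5011)})
cache.apply_update({"rdb_equity": ("server3", 5010)})  # master reports a move
cache.apply_update({})                                  # a bad push changes nothing
```

A stale entry can still point at a dead server, which is the accepted worst case: the caller times out, but the lookup itself never fails because the cache was emptied.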

Mega-Gateway - MegaGW

The MegaGW takes a user query, works out where it needs to be sent, sends queries to those locations and returns the result. We can make one process like that, but how do we scale it up to 100s of users while still looking like one address? I don’t have time to go into every variation I’ve seen or considered, so I’ll just show one possibility:

Starting from the User Query:

- They connect to a domain name, let’s say data.company.com

- That is a TCP load balancer that rotates over multiple servers, so the user connects to, say, port 9000 on server 1

- Running on port 9000 on server 1 is another load balancer, the software-based HAProxy, which forwards the connection to, and returns data from, one of the underlying q processes.

- So let’s say that on ports 9001-9010 we are running 10 MegaGW processes

- Each MegaGW is a q process that has a table listing all the data sources

- We override .z.pg to break apart the user query and forward it to the relevant machines and return the result.

The nice thing here is that we’ve used off-the-shelf components, so the load balancing needs NO-CODE. The best code is no-code. The load balancers only know that they are forwarding connections. Meanwhile we can program the MegaGW as if it’s just one small component. It will behave to the user exactly like a standard q process, meaning all the existing APIs and tools like QStudio will just work.
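To show how small the MegaGW logic can be, here is a sketch of the routing step in Python. A real MegaGW would be a q process doing this inside `.z.pg`; everything below is illustrative. The structured request (table plus date range), the `sources` table keyed by `(table, kind)`, the RDB-holds-today split and the `remote_call` hook are all assumptions made for the example.

```python
from datetime import date, timedelta


class MegaGW:
    """Splits a date-range request between HDB and RDB, forwards, and merges."""

    def __init__(self, sources, remote_call, today):
        self.sources = sources          # {(table, "rdb" | "hdb"): (host, port)}
        self.remote_call = remote_call  # hypothetical: (host, port, table, start, end) -> rows
        self.today = today              # assumed: RDB holds today, HDB holds earlier dates

    def handle(self, table, start, end):
        rows = []
        if start < self.today:
            # Historical part: clip the range to yesterday at the latest.
            host, port = self.sources[(table, "hdb")]
            hist_end = min(end, self.today - timedelta(days=1))
            rows += self.remote_call(host, port, table, start, hist_end)
        if end >= self.today:
            # Realtime part: clip the range to today at the earliest.
            host, port = self.sources[(table, "rdb")]
            rows += self.remote_call(host, port, table, max(start, self.today), end)
        return rows


calls = []

def fake_remote(host, port, table, start, end):
    # Stand-in for the IPC call to a real RDB/HDB; records what was asked.
    calls.append((host, port, start, end))
    return [(host, table, start, end)]

gw = MegaGW(
    sources={("trade", "hdb"): ("server2", 5011), ("trade", "rdb"): ("server1", 5010)},
    remote_call=fake_remote,
    today=date(2024, 1, 10),
)
result = gw.handle("trade", date(2024, 1, 8), date(2024, 1, 10))
```

The user asked one question over three days; the gateway turned it into one HDB call for 8-9 January and one RDB call for 10 January, and returned the combined rows.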

Future Work

- Mega-Selects – beautiful user API

- Sharding – captures larger than 1 machine

- Middleware – IPC, Solace or Kafka

- User Sandboxes

- Hosted API – Allowing other teams to define kdb APIs in megaGW safely

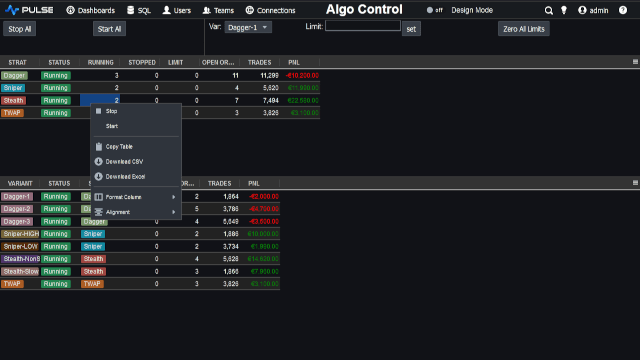

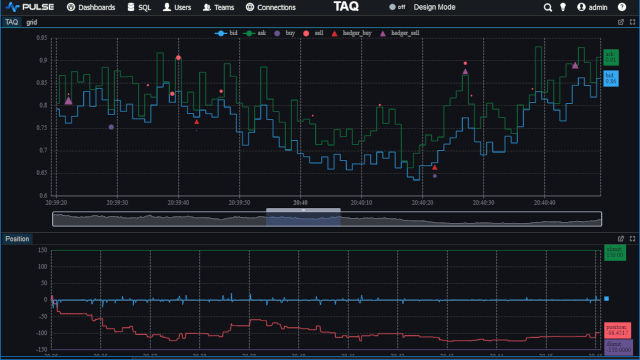

- Apps – As well as captures, host RTEs

- Failover

- Regional – hosted databases

- Improved Load-balancing. Persistent connections.