Pulse 3.36 – Grid Icons Images

Pulse 3.36 adds support for icons and images within tables:

This is demonstrated in the video, along with multi-column sorting and real-time filtering.

Pulse 3.28 is now released. (Release notes)

You can now add table footers that show aggregated views of the data including average, count and sum.

The aggregation function for a given column can be chosen within the column specific configuration:

Users can also get an overview of the data in one column: how many unique values it contains, plus nulls, minimum, maximum, etc.
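To make the footer aggregations and the column overview concrete, here is a minimal Python sketch of the calculations involved. It is not Pulse's implementation or configuration format: the sample rows, the `footer_config` mapping and the function names are all hypothetical, and it assumes nulls are skipped when aggregating.

```python
from statistics import mean

# Hypothetical rows, standing in for the result of a table's query.
rows = [
    {"sym": "AAA", "price": 10.0, "size": 100},
    {"sym": "BBB", "price": 20.0, "size": None},
    {"sym": "AAA", "price": 30.0, "size": 300},
]

# The footer aggregations named in the post: average, count and sum.
AGGREGATIONS = {
    "average": mean,
    "count": len,
    "sum": sum,
}


def footer_value(rows, column, aggregation):
    """Aggregate the non-null values of one column for a table footer."""
    values = [r[column] for r in rows if r[column] is not None]
    return AGGREGATIONS[aggregation](values)


def column_overview(rows, column):
    """Summarise one column: unique values, nulls, minimum and maximum."""
    values = [r[column] for r in rows]
    present = [v for v in values if v is not None]
    return {
        "unique": len(set(present)),
        "nulls": len(values) - len(present),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


# Hypothetical per-column choice of footer aggregation.
footer_config = {"price": "average", "size": "sum"}
footer = {col: footer_value(rows, col, agg) for col, agg in footer_config.items()}
```

Calling `column_overview(rows, "size")` on the sample rows reports two unique values and one null, which is the kind of at-a-glance summary described above.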

Other additions include:

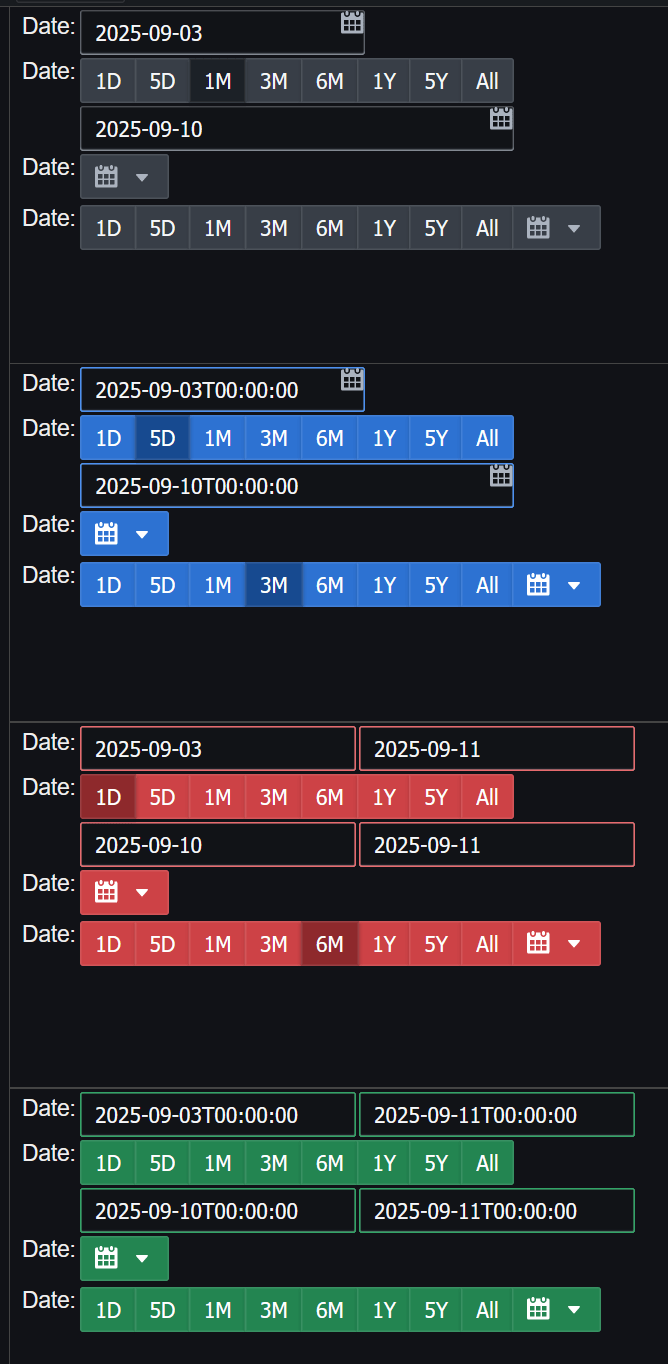

Pulse 3.25 released with Conditional formatting, a new form creator and new date picker widgets:

__ALL__ for when there are lots of options.

Releasing dashboards from file to allow git file based deploys:

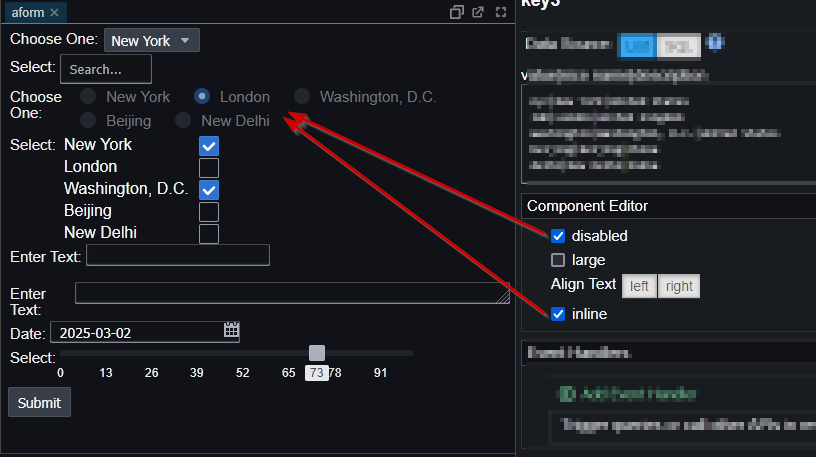

Improved Form Customization with radio/checkbox inline/disabled/large options. Allow specifying step size and labels for numeric slider.

Send {{ALL}} variables to kdb+ as a nested dictionary:

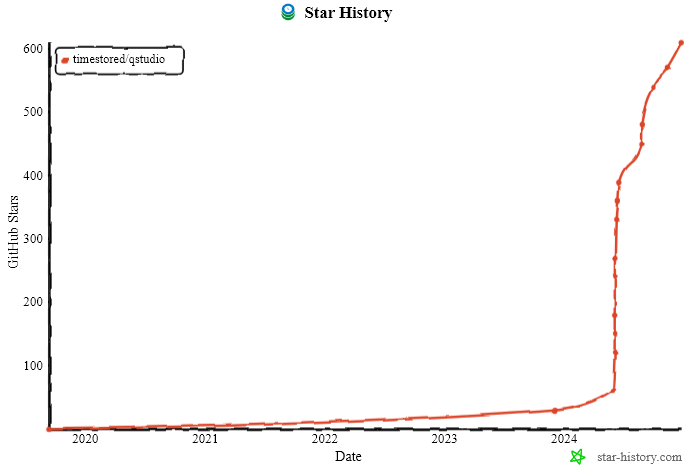

2024 has been a good year, with new major versions of both QStudio and Pulse released. Thousands of new users are using our tools, and we continue to release regularly and keep improving. Thanks go to our users for raising issues, providing feedback and commercially backing us.

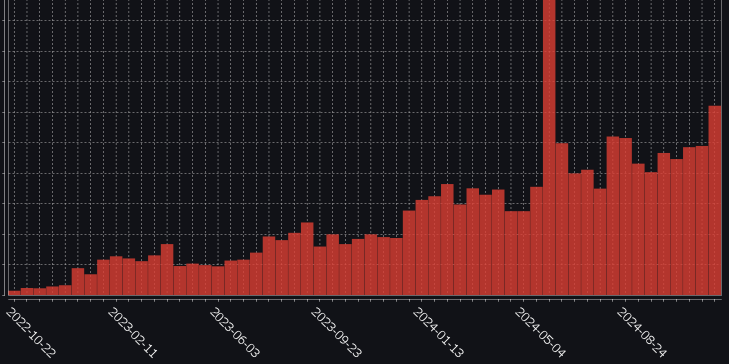

Admittedly we weren’t trying to get GitHub attention for the first 10 years, so it’s a low start, but we’ve made good progress.

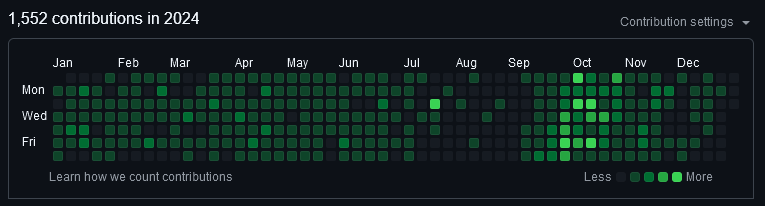

It looks like a quick holiday from coding in August, then coming back with fresh ideas and heads-down in October to get Notebooks released.

In case you are wondering, the one fortnight where the data is off the chart was this Hacker News post.

We used https://notes.cleve.ai/unwrapped to generate this calendar of our major LinkedIn posts:

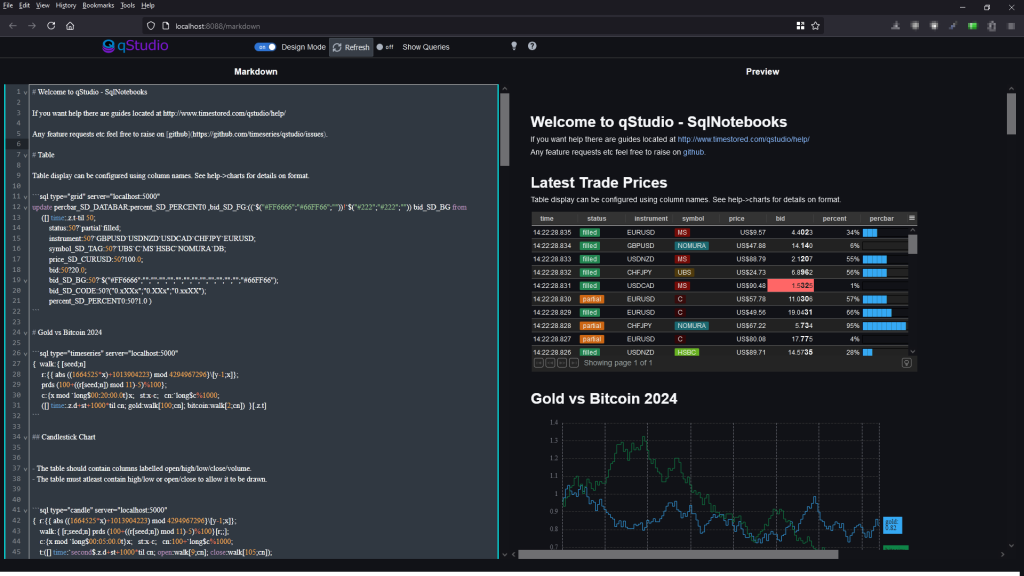

Want to create beautiful live updating SQL notebooks?

While being able to easily source control the code?

and take static snapshots to share with colleagues that don’t have database access?

Today we launched exactly what you need and it’s available in both:

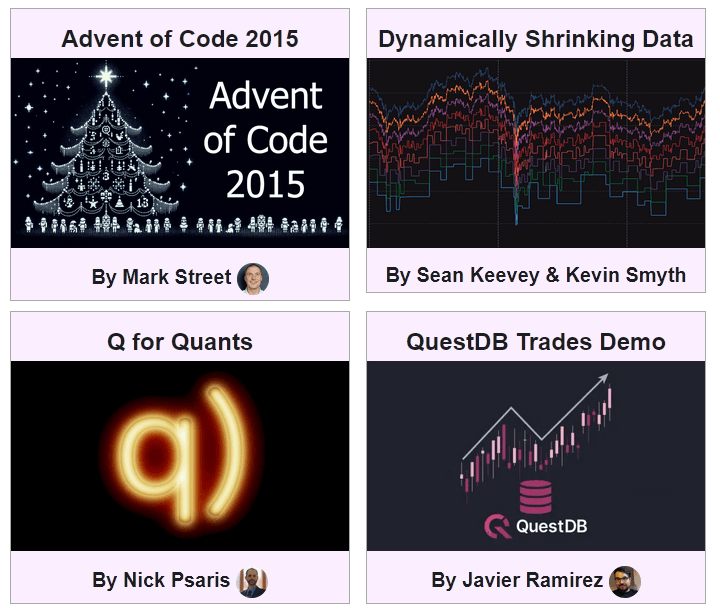

We have worked with leading members of the community to create a showcase of examples.

These are snapshotted versions with static data. The source markdown and most of the data needed to recreate them are available on GitHub.

Let us know what you think. Please report any issues, feature suggestions or bugs on our GitHub QStudio issue tracker or Pulse issue tracker.

Thanks to everyone that made this possible. Particularly Brian Luft, Rich Brown, Javier Ramirez, Alexander Unterrainer, Mark Street, James Galligan, Sean Keevey, Kevin Smyth, KX, Nick Psaris and QuestDB.

It’s approaching 2 years since we launched Pulse and it’s a privilege to continue to listen to users and improve the tool to deliver more for them. A massive thanks to everyone that has joined us on the journey. This includes our free users, who have provided a huge amount of feedback. We are committed to maintaining a free version forever.

We want to keep moving at speed to enable you to build the best data applications.

Below are some features we have added recently.

Pulse enables authors to simply write a select query, then choose columns for group-by, pivot and aggregation. Users can then change the pivoted columns to get different views of the data. The really cool technical part is:

We just launched a new SQL documentation website, sqldock.com, to allow easier integration between Pulse / qStudio and the docs.

More updates on this integration will be announced shortly. 🙂

We have been working on version 2.0 of Pulse with a select group of advanced users for weeks now. To give you a preview of one new feature, check out the markers shown on the chart below. We have marker points, lines and areas. For example, this will allow adding a news event to a line showing a stock price. This, together with many other changes, should be released soon as part of 2.0.

If this is something that interests you, message me.

Particularly if you have tried other notebooks and hold strong opinions 😡 .